
Introduction

Our commercial culture has amazing twists.

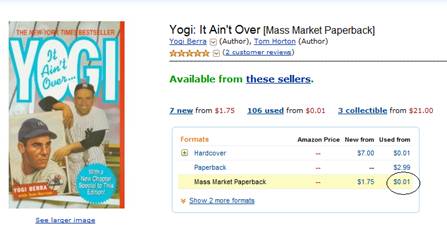

At Amazon.com, for a mere one cent you can purchase a book by Yogi Berra, the baseball catcher who made good with "Yogiisms" like "It ain't over 'til it's over."

This popular philosophical quote also happens to be the title of Berra's autobiographical 1989 book covering his tectonic ups and downs in major league baseball.

So what's the amazing twist on a one cent book?

Well, until I wrote this article, I believed the twist was the $3.99 shipping charge, which let the vendor make a few cents because the actual shipping cost even less.

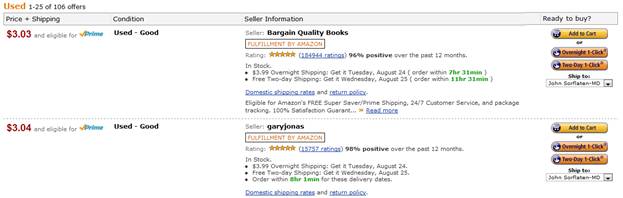

However, looking at the Used Book listing for Yogi's title, I just saw that I can effectively purchase his book for "negative $0.96"!

In this latter case, the Amazon "Prime" price is only $3.03, meaning the vendor gave up some of their $3.99 shipping allowance to subsidize my purchase to the tune of 96 cents! Now that's an even more amazing twist.

So, "it ain't over 'til it's over" when selling books.

What about your usability test recommendations?

So you might think that when you write up the problems, make your design recommendations and turn them in, you've done your job. Right?

Wrong. You just forgot: "It ain't over 'til it's over."

The truth about truth

I'm sure you've had occasion to tell colleagues and managers that the source of usability problems in many cases is "ego-centric design." HFI publicizes this truth with a famous button passed out at courses: "Know Thy Users, For They Are Not You."

But we might ask whether we, worldly-wise and experienced designers, have avoided "ego-centric re-design" when recommending solutions to usability problems.

We report the "truth" about an application's usability faults. But how well do we frame our re-design recommendations so they are truly usable for our readers?

How truthfully can others interpret our recommendations?

This is the question asked by a trio of usability authors, each well-known in their own right: Rolf Molich, Robin Jeffries and Joe Dumas. Their 2007 study is titled "Making Usability Recommendations Useful and Usable."

They examined how well 17 teams of usability professionals wrote up usability evaluation reports of a hotel reservation system in use by hundreds of hotels.

Teams had 1 to 5 members (an average of 1.6 persons), with between 5 and 40 years of combined usability experience per team. Not a bad collection of skills.

However, our trio of researchers found that 17% of the redesign recommendations from the teams "were not useful at all." 19% of the redesign recommendations "were not usable at all." And only 17% of the redesign recommendations "were both useful and usable."

The authors report: "Quality problems include recommendations that are vague or not actionable, and ones that may not improve the overall usability of the application."

What went wrong? How many of us thought that the hard work was the evaluation, not the write-up? Is writing usability evaluations a risky endeavor? What do you think now?

Let's see why "it ain't over 'til it's over," next.

Evaluating usability recommendations

We'll jump to the crux of the study: How did the authors evaluate the usability recommendations written by those 17 teams? (We'll get to other details later.)

1. The authors found 81 usability problems that were identified by at least 10 out of the 17 evaluation teams. Because most teams agreed on these problems, the authors did not have to defend their own definition of "usability problem."

2. The authors developed 5-point scales for usefulness and for usability. They independently rated a "training set" of usability recommendations and then compared their ratings.

They ended their training period after reaching agreement on 89% of their trial evaluations.

3. Their evaluation scales follow. Instructions to the participants stated that recommendations should be short. I give you the essence of the authors' comments.

The gold standard for useful and usable recommendations (give it a "5"!)

A useful recommendation: "an effective idea for solving the usability problem." The description does not contain any bad suggestions. The quality of the description is not considered as long as the idea is comprehensible. (Quality is considered in the usability rating.)

A usable recommendation: "communicates precisely and in reasonable detail what the product team should do to implement the idea behind the recommendation." Usability is rated after usefulness, but independently of whether the idea is useful. "A recommendation that is not considered useful at all may thus still be fully usable and vice versa."

5 (Fully useful): Meets the above criteria. "As good as it's going to get" given a request for a short description.

5 (Fully usable): Ditto.

Examples:

Usefulness: 5.0. Usability: 5.0.

Recommendation that received these ratings: "Provide field labels next to, not within the fields... Do not present everything all on one page. Although this is a main feature of the system, it reduces the overall effectiveness by forcing too much on a limited screen space. Place the calendar and room selector on the same page, with the logic to calculate the cost (fully itemized to show taxes etc) based on selections. Use a 'Proceed to booking' button to go to a second page for capturing name card info etc, and transferring the cost, room type & date information."

Usefulness: 1.0. Usability: 5.0.

Recommendation that received these ratings: "...centering the labels might allow the cursor position to be noticed more quickly." Rater comment: "Although [this] recommendation is fully usable (there is no doubt as to what should be done), we were not convinced that centering the labels would actually improve the usability of the application."

Would you agree with these definitions of "useful" and "usable"? It's not unlike your literature teacher giving you a double grade: an F for Thoughtfulness (like "usefulness") and an A for Composition (like "usability"). This means your teacher hated the ideas, but loved your spelling, punctuation, and sentence construction!

The other ratings

4 (Useful recommendation): "an effective idea [but with]... minor flaws, omissions, or bad elements that may influence the usability of the resulting solution."

4 (Usable recommendation): "communicates precisely and in some detail what the product team should do. Minor details are missing; this may influence the usability of the resulting solution."

3 (Partly useful): "the recommendation also leaves significant parts of the problem unaddressed... Contains roughly equal magnitudes of good and bad ideas, when it would solve the problem only for approximately half the users, or when the idea could introduce new usability problems."

3 (Partly usable): "communicates some information about what the product team should do. The recommendation leaves some important decisions regarding the implementation... to the product team. This could introduce new usability problems in the solution."

2 (A few useful elements): "an idea that would solve only a minor part of the problem, or... only for a minor group of users." Or, "when part of the description is so vague that the usefulness of the idea is doubtful..."

2 (Mostly unusable): "most of the description is vague, unclear or difficult to understand...; Leaves many important decisions regarding the implementation of the solution to the product team."

1 (Not useful): "...might even decrease the usefulness of the product... vague or unclear..."

1 (Unusable): "...totally vague, unclear, or incomprehensible for the product team."

I think we get the idea: 3 is the middle score, half good and half, well, just plain bad. Gee. Guess we'd better score higher than 3 or else find another career. Clearly, a 3 is a make-or-break score!

A final contrast of scores

Let's see some examples of tough love from our trio of authors...

Examples:

Usefulness: 5.0. Usability: 4.3.

Recommendation that received these ratings: "People are used to providing the card type along with the number and expiration date. It is not widely known that card type is redundant. To make this display fit the mental model of the user, it would be good if the icon reacted like buttons. These 'buttons' need not work on the back side but would help the user."

Usefulness: 5.0. Usability: 1.0.

Recommendation that received these ratings: "Some users may be inclined to click on the credit card icons to specify which card they are using. Suggested solution: Change the visual presentation to discourage this unnecessary behavior." Rater comment: "This advice is vague [and thus not usable]. Several teams were vague about how the appearance of a selected icon would be different from a non-selected one."

Usefulness: 1.3. Usability: 5.0.

Recommendation that received these ratings: "The icons appear to serve no purpose. If this is the case, they should be removed so as to avoid any confusion." Rater comment: "...incorrect. The icons inform users of which credit cards are accepted by the hotel. On the other hand, the recommendation ('remove icons') is very precise and actionable."

Whew. If you can't stand the heat, get out of the kitchen, right?

Be explicit: say what you mean

The authors found that teams took seriously the challenge of offering recommendations. Teams offered suggestions 96% of the time across the 81 problems.

However, the authors found that 16% of the time teams failed to use the word "Recommendation" or an equivalent, so their suggested changes were easy to overlook. As the authors comment: "Implicit recommendations often sound like complaints or unprocessed observations of test participant difficulties..."

Recommendation: use the word "Recommendation" when making a recommendation (!) (Explicate explicitly!)

The humbling details

Our authors summarized their findings by defining "high-quality" recommendations as 4.0 and above for both usefulness and usability.

What percentage of the 81 recommendations would you guess met that modest criterion?

Well, only 17% of the 81 recommendations (14 of them) could be called both "Useful" and "Usable" or better.

The authors found more pain among the teams: only 42% of the 81 recommendations (34) could be called both "Partly Useful" and "Partly Usable" (3.0 or better).
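Both cut-offs are simple thresholds that a recommendation must clear on both scales at once. As a minimal sketch (the helper names below are hypothetical and not taken from the study), here is how the two criteria sort the example ratings quoted earlier in this article, plus a check that the reported percentages match the counts:

```python
# Hypothetical helpers applying the thresholds described in this article;
# the names are illustrative, not taken from Molich, Jeffries and Dumas (2007).

def is_high_quality(usefulness: float, usability: float) -> bool:
    """Both ratings 4.0 or above ("useful" and "usable" or better)."""
    return usefulness >= 4.0 and usability >= 4.0


def is_at_least_partly_ok(usefulness: float, usability: float) -> bool:
    """Both ratings 3.0 or above ("partly useful" and "partly usable" or better)."""
    return usefulness >= 3.0 and usability >= 3.0


# (usefulness, usability) pairs quoted as examples in this article
examples = [(5.0, 5.0), (1.0, 5.0), (5.0, 4.3), (5.0, 1.0), (1.3, 5.0)]

for usefulness, usability in examples:
    print(usefulness, usability,
          is_high_quality(usefulness, usability),
          is_at_least_partly_ok(usefulness, usability))

# Converting the reported shares of 81 recommendations back to counts
print(round(0.17 * 81), round(0.42 * 81))  # 14 34
```

Because both scales must clear the bar, a perfect 5.0 on one scale cannot rescue a 1.0 on the other: only two of the five quoted examples pass as "high quality."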

Can we conclude that it's tough to write right?

Well, that's the point of this study. Yes, it is tough to write good recommendations.

It ain't over 'til it's over: how to write right

Hopefully, you've learned that testing is not the hardest part. It's the quality of your recommendations that tips the scales.

Are you motivated, now? Here's advice from our three authors.

1. Check your work for vague-uity.

Vague and vacuous recommendations multiply when we write in a rush. Solution: have another person check for vague, unclear recommendations, or at least reread your own work after giving it a rest. Make sure each recommendation has clear context.

2. Avoid solutions that create other problems elsewhere.

Base your recommendations on data: usability test findings, standards that have been proven through use, or proven "best practices." The authors advise: avoid "unsubstantiated opinions."

3. Beware of business or technical constraints.

Show that you understand the business issues before recommending that a constraint be set aside. For example, a logo may have a long-standing claim to a certain position on the website. Be sure you weigh all the pros and cons before overturning accepted practices.

4. Be sure to test sweeping changes.

You can't expect to predict all the outcomes of all your recommendations. Let your readers know ahead of time which recommendations need further testing.

5. Be specific. Be clear. This makes your writing "usable."

Show examples to shut out vagueness. For example: writing, "change the visual presentation [of the credit card icons] to discourage unnecessary clicks," fails to specify what kind of change is best. Don't expect other people to be brilliant when you can't do it yourself.

Where Yogi Berra said his thing...

The Yogiism "It ain't over 'til it's over" was conceived, born, and delivered in July 1973.

Yogi Berra's Mets trailed the Chicago Cubs by 9½ games in the National League East. With Berra's inspiration, the Mets rallied to win the division title on the final day of the season.

References

Molich, Rolf; Jeffries, Robin; Dumas, Joseph. 2007. Making Usability Recommendations Useful and Usable. Journal of Usability Studies, 2 (4), 162-179.

Yogi Berra. Wikipedia. Retrieved 24 Aug 2010.


Reader comments

Chris Bean

Towers Watson

Lack of common terminology between groups is a big problem, remembering to dumb down from time to time, pays dividend.

Elizabeth Spiegel

If you want clear communication (in this case, usable recommendations), employ an editor.

Qurie de Berk

Plag websucces


